Readers of The Shift will have noticed that the Disinformation Watch series sometimes addresses the same disinformation tactic in more than one article. That is not because we have forgotten what we already published, but because falsehoods, misconceptions and mistaken beliefs tend to stick long after they have been corrected.

But what if instead of fact-checking disinformation reactively, we looked at the building blocks of disinformation and dealt with them pre-emptively? This is the idea behind a series of games launched by researchers at the University of Cambridge that are based on a psychological framework from the 1960s known as ‘inoculation theory’.

In broad terms, inoculation theory hypothesises that disinformation can be treated much like a virus. Just as exposure to a weakened pathogen triggers the production of antibodies, exposing people to a weakened persuasive argument builds their resistance against future manipulation, potentially curbing the spread of disinformation.

Bad News

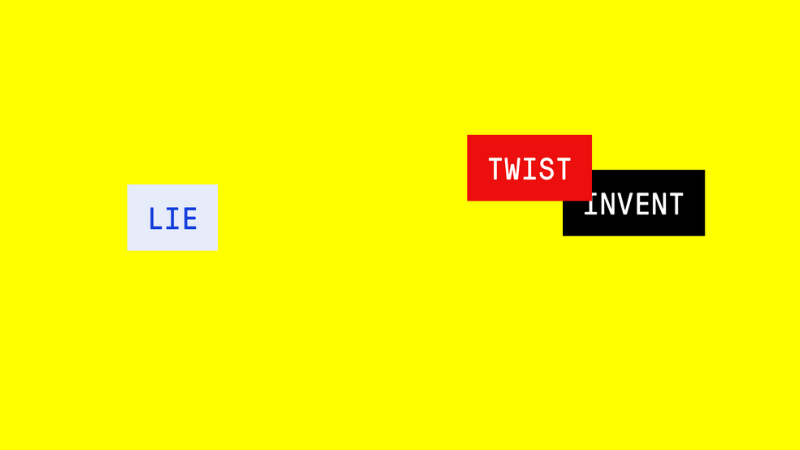

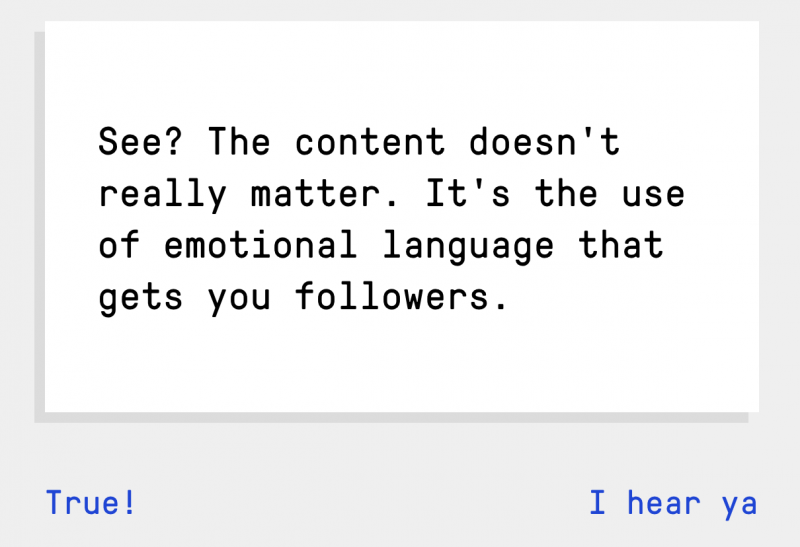

In February 2018, researchers Jon Roozenbeek and Sander van der Linden helped launch the browser game Bad News. The game asks players to take on the role of nefarious content creators who stoke anger and fear by manipulating news and social media within the simulation. They can deploy Twitter bots, photoshop evidence and create conspiracy theories to attract followers, all while trying to maintain a “credibility score” for persuasiveness. Players also earn badges as the game progresses, with each badge representing one of six strategies commonly used to spread misinformation.

Thousands of people spent 15 minutes playing the game, and many of them agreed to have their data collected. To gauge the game’s efficacy, players were asked to rate the reliability of a number of headlines and tweets before and after playing. The items they saw were a randomly allocated mixture of real (“control”) and fake (“treatment”) news.

The first results, published the following year, found that the perceived reliability of fake news fell by an average of 21% after players completed the game. The study also found that the players who were initially most susceptible to misleading or false headlines benefited the most from the “inoculation.”

The inoculation approach is promising, but it has limitations. One is that its positive effect fades over time: a follow-up study found that people’s improved ability to correctly identify manipulated information lasted approximately three months. This suggests that “booster shots” may be required to keep our brains immune to disinformation narratives over time.

Another consideration Jon Roozenbeek highlighted when speaking to The Shift is that although the game helps people recognise what misleading content might look like, it is unclear whether inoculation games reduce polarisation, an issue that is particularly pertinent to Malta.

Screenshot of the game Bad News. Copyright ©University of Cambridge/DROG

More than one way to be bad

Although research is still ongoing, two more games have been launched since Bad News. The first, Harmony Square, was produced to combat election disinformation. In this game, the player’s job is to destroy a peaceful community by mounting a disinformation campaign, dividing its residents and fuelling polarisation. If this game doesn’t sound like your average day in Malta’s public discourse, we don’t know what does.

The third game, GoViral!, is a five-minute game designed specifically to target the three most common techniques used to spread disinformation about Covid-19: fearmongering, fake experts and conspiracy theories.

Games are not the only way to pre-emptively debunk (pre-bunk) misinformation. Last year, researchers at the universities of Bristol and Cambridge collaborated with Jigsaw (a Google company) to develop short animated videos that inoculate viewers against common disinformation tropes. Twitter also applied a pre-bunking technique, placing messages at the top of users’ feeds to warn in advance of false information about voting and election results. Meanwhile, information and media literacy programmes continue to equip people with the tools to spot manipulative techniques.

Living with disinformation

There are a number of reasons why disinformation tends to linger longer than facts. In the digital world, false or simply misleading content can be created and spread far more quickly than any fact-checker can identify, examine and correct it. Misleading information couched in sensationalism is also a lot easier to remember than the data used to debunk it. Add to this people’s tendency to judge repeated misinformation as true, and correcting the record can feel like a hopeless task.

It’s not, of course. Incorrect and misleading information will always warrant correction, especially since the societal cost of disinformation cannot be ignored. Inoculation theory is a useful and effective approach, but it is not intended as a standalone solution or as a replacement for other counter-measures.

“What we don’t want to happen is for social media giants like Facebook and Google to say ‘right – we’re going to use an inoculation theory game on all our users’ – that would not in itself be enough to solve the misinformation problem,” says Roozenbeek.

As to whether we’ll ever see a fundamental shift in how we address the problem of online disinformation, Roozenbeek says this remains to be seen. “Perhaps in the future, there will be enough science to show the scale of the harm done by online mis- and disinformation and then there might be enough political will to consider regulating social media platforms, although this is of course highly complex because you don’t want to stifle free and open debate.”

Until then, we can help ourselves by using a game to train our brains to spot the underlying tricks of manipulated information.

Featured Image by Bad News (Copyright ©University of Cambridge/DROG)